Reduce development time and costs by caching model responses and avoiding redundant API calls. This is ideal for testing, iteration, and cost-conscious development.

Overview

Response caching stores model responses locally, so a repeated, identical request is answered from the cache instead of the API. This cuts both cost and response time during development and testing.
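Conceptually, a response cache derives a key from each request and stores the response under that key, so only an identical request can produce a cache hit. The sketch below is a hypothetical illustration, not Agno's internal implementation: `cache_key` is an illustrative name, and it assumes the key is a SHA-256 hash of the model id, messages, and any extra parameters.

```python
import hashlib
import json
from typing import Any, Dict, List


def cache_key(model_id: str, messages: List[Dict[str, Any]], **params: Any) -> str:
    """Hypothetical: derive a deterministic cache key for a model request.

    The same model id, messages, and parameters always produce the same key,
    so a repeated request can be served from the cache.
    """
    payload = {"model": model_id, "messages": messages, "params": params}
    # sort_keys makes the JSON (and therefore the hash) independent of dict order
    encoded = json.dumps(payload, sort_keys=True).encode("utf-8")
    return hashlib.sha256(encoded).hexdigest()


prompt = [{"role": "user", "content": "Write a short story about a cat."}]
k1 = cache_key("gpt-4o", prompt)
k2 = cache_key("gpt-4o", prompt)
k3 = cache_key("gpt-4o", [{"role": "user", "content": "Write a short story about a dog."}])
print(k1 == k2)  # True: identical request, cache hit
print(k1 == k3)  # False: different prompt, cache miss
```

This is also why response caching suits tests with fixed prompts: any change to the prompt or parameters produces a new key and a fresh API call.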

Agno provides two complementary caching strategies:

- Response Caching: Caches the entire model response locally to avoid repeated API calls

- Prompt Caching: Caches system prompts on the provider's side to reduce processing costs (Anthropic, OpenAI, OpenRouter)

When to Use

- Development and testing workflows

- Unit testing with consistent results

- Cost optimization during prototyping

- Rate limit management

- Offline development

Do not use response caching in production for dynamic content or when you need fresh, up-to-date responses for each query.
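One way to follow this rule is to decide `cache_response` from the runtime environment. The helper below is a hypothetical sketch: it assumes your deployment exposes its environment through a variable such as `APP_ENV` (an illustrative name, not something Agno reads), and it enables caching everywhere except production.

```python
import os


def response_caching_enabled(env_var: str = "APP_ENV") -> bool:
    """Hypothetical helper: enable response caching everywhere except production.

    Assumes the environment name is stored in an environment variable
    (APP_ENV by default); adapt the variable name to your own setup.
    """
    environment = os.getenv(env_var, "development").strip().lower()
    return environment != "production"


os.environ["APP_ENV"] = "development"
print(response_caching_enabled())  # True

os.environ["APP_ENV"] = "production"
print(response_caching_enabled())  # False
```

The result can then be passed to the model, for example `OpenAIChat(id="gpt-4o", cache_response=response_caching_enabled())`.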

Prerequisites

- Python 3.7 or higher

- Agno library (`pip install -U agno`)

- API keys for your model provider

- Basic understanding of Agno Agents

Response Caching

Response caching is configured at the model level. See the full documentation for details.

Enable Caching

```python
from agno.agent import Agent
from agno.models.openai import OpenAIChat

agent = Agent(model=OpenAIChat(id="gpt-4o", cache_response=True))

# First call - cache miss (calls API)
response = agent.run("Write a short story about a cat.")
print(f"First call: {response.metrics.duration:.3f}s")

# Second call - cache hit (instant)
response = agent.run("Write a short story about a cat.")
print(f"Cached call: {response.metrics.duration:.3f}s")
```

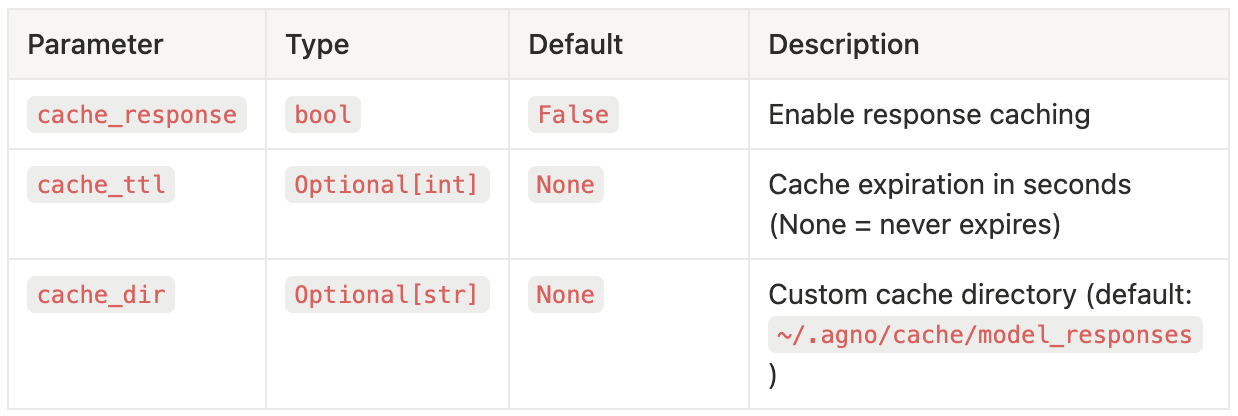

Configuration Options:

- `cache_response`: Enable or disable caching (default: `False`)

- `cache_ttl`: Cache expiration in seconds (default: never expires)

- `cache_dir`: Custom cache location (default: `~/.agno/cache/model_responses`)
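To make the `cache_dir` and `cache_ttl` semantics concrete, here is a small file-based cache. It is a hypothetical sketch (the `FileResponseCache` class is illustrative and not part of Agno, whose on-disk format may differ) that mirrors only the documented behavior: entries live under a directory and, when a TTL is set, expire after that many seconds; with no TTL they never expire.

```python
import hashlib
import json
import tempfile
import time
from pathlib import Path
from typing import Optional


class FileResponseCache:
    """Hypothetical file-based cache illustrating cache_dir and cache_ttl.

    Not Agno's implementation: it only mirrors the documented semantics.
    Entries live under cache_dir, and ttl=None means they never expire.
    """

    def __init__(self, cache_dir: str, ttl: Optional[float] = None) -> None:
        self.cache_dir = Path(cache_dir).expanduser()
        self.cache_dir.mkdir(parents=True, exist_ok=True)
        self.ttl = ttl

    def _path(self, prompt: str) -> Path:
        # One JSON file per request, named by a hash of the prompt text
        digest = hashlib.sha256(prompt.encode("utf-8")).hexdigest()
        return self.cache_dir / f"{digest}.json"

    def get(self, prompt: str) -> Optional[str]:
        path = self._path(prompt)
        if not path.exists():
            return None  # miss: never stored
        entry = json.loads(path.read_text(encoding="utf-8"))
        if self.ttl is not None and time.time() - entry["created_at"] > self.ttl:
            path.unlink()  # miss: entry is older than the TTL
            return None
        return entry["response"]  # hit: no API call needed

    def set(self, prompt: str, response: str) -> None:
        entry = {"created_at": time.time(), "response": response}
        self._path(prompt).write_text(json.dumps(entry), encoding="utf-8")


with tempfile.TemporaryDirectory() as tmp:
    cache = FileResponseCache(tmp, ttl=3600)  # comparable to cache_ttl=3600
    prompt = "Write a short story about a cat."
    print(cache.get(prompt))  # None (cache miss)
    cache.set(prompt, "Once upon a time...")
    print(cache.get(prompt))  # Once upon a time...
```

Expired entries are deleted lazily on read, which keeps the sketch simple; a real cache might also prune old files in the background.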

Example with options:

```python
agent = Agent(
    model=OpenAIChat(
        id="gpt-4o",
        cache_response=True,
        cache_ttl=3600,  # Cache expires after 1 hour
        cache_dir="./cache",  # Custom cache directory
    )
)
```

Prompt Caching

Prompt caching reduces costs by caching system prompts on the provider's side. Learn more about context caching.

Anthropic Prompt Caching

The system prompt must be at least 1024 tokens long to be cached; shorter prompts are processed normally, without caching. See Anthropic's documentation.

```python
from agno.agent import Agent
from agno.models.anthropic import Claude

# Your large system prompt (must be 1024+ tokens)
system_message = "Your comprehensive system prompt here..."

agent = Agent(
    model=Claude(
        id="claude-sonnet-4-20250514",
        cache_system_prompt=True,  # Enable prompt caching
    ),
    system_message=system_message,
)

# First run - creates cache
response = agent.run("Explain REST APIs")
print(f"Cache write tokens: {response.metrics.cache_write_tokens}")

# Second run - uses cached prompt
response = agent.run("Explain GraphQL APIs")
print(f"Cache read tokens: {response.metrics.cache_read_tokens}")
```
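Because prompts below the 1024-token minimum are silently processed without caching, a rough pre-flight check can save some confusion. The sketch below is hypothetical: `likely_cacheable` is an illustrative helper, and the 4-characters-per-token ratio is only a heuristic for English text, not a real tokenizer. Some models (for example, Claude Haiku) have a higher minimum, so check Anthropic's documentation for your model.

```python
import math

# Minimum cacheable length stated above; some models require more
MIN_CACHEABLE_TOKENS = 1024

# Assumption: roughly 4 characters per token for English text.
# This is a heuristic only; use the provider's token counting for exact numbers.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Rough token estimate based on character count."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def likely_cacheable(system_message: str, minimum: int = MIN_CACHEABLE_TOKENS) -> bool:
    """Hypothetical check before enabling cache_system_prompt."""
    return estimate_tokens(system_message) >= minimum


print(likely_cacheable("Your comprehensive system prompt here..."))  # False
print(likely_cacheable("You are a meticulous API design reviewer. " * 120))  # True
```

If the check fails, `cache_write_tokens` on the first run is a more reliable signal: a value of zero suggests the prompt was not cached.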

Extended Cache Time (Beta): Extend the prompt cache duration from the default 5 minutes to 1 hour:

```python
agent = Agent(
    model=Claude(
        id="claude-sonnet-4-20250514",
        default_headers={"anthropic-beta": "extended-cache-ttl-2025-04-11"},
        cache_system_prompt=True,
        extended_cache_time=True,  # Extend to 1 hour
    ),
    system_message=system_message,
)
```
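Whether the longer duration helps depends on how often requests arrive. The following simulation is a hypothetical sketch, not Agno code: `simulate_prompt_cache` is an illustrative name, and it assumes Anthropic's documented behavior that each cache hit refreshes the entry's lifetime. It counts cache writes versus reads for a given request pattern.

```python
from typing import List, Optional, Tuple

FIVE_MINUTES = 5 * 60
ONE_HOUR = 60 * 60


def simulate_prompt_cache(request_times: List[float], ttl: float) -> Tuple[int, int]:
    """Count cache writes and reads for requests sharing one system prompt.

    Assumes an entry lives for `ttl` seconds and every hit refreshes that
    lifetime. Returns (writes, reads).
    """
    writes = reads = 0
    expires_at: Optional[float] = None
    for t in sorted(request_times):
        if expires_at is not None and t <= expires_at:
            reads += 1  # hit: prompt served from cache
        else:
            writes += 1  # miss: prompt processed and written to cache
        expires_at = t + ttl  # every request leaves a fresh entry behind
    return writes, reads


# Hypothetical workload: one request every 10 minutes for an hour
times = [i * 600 for i in range(7)]
print(simulate_prompt_cache(times, FIVE_MINUTES))  # (7, 0)
print(simulate_prompt_cache(times, ONE_HOUR))  # (1, 6)
```

With requests 10 minutes apart, the 5-minute cache expires between every call, while the 1-hour cache is written once and then read. Anthropic prices extended-duration cache writes differently from standard ones, so compare against its pricing before enabling the beta.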

OpenAI & OpenRouter

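Prompt caching happens automatically on supported models, with no extra configuration in Agno. OpenAI, for example, applies it to prompts of 1024 tokens or longer. See the OpenAI and OpenRouter documentation.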

```python
from agno.agent import Agent
from agno.models.openai import OpenAIChat

# Prompt caching happens automatically for supported models
agent = Agent(model=OpenAIChat(id="gpt-4o"))
```
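Provider-side prompt caching reuses only an exact prefix of an earlier prompt, which is why a large, static system prompt placed first benefits most. The toy sketch below uses words as stand-in "tokens" (a simplification; real tokenizers split text differently), and `shared_prefix_length` is a hypothetical helper that measures how much of a new prompt matches the start of a previous one.

```python
from typing import Sequence


def shared_prefix_length(previous: Sequence[str], current: Sequence[str]) -> int:
    """Length of the common leading run of two token sequences.

    Prefix-based prompt caches can only reuse this shared leading part.
    """
    length = 0
    for a, b in zip(previous, current):
        if a != b:
            break
        length += 1
    return length


system = ["<long", "static", "system", "prompt>"]

# Static system prompt first: everything up to the differing word is shared
print(shared_prefix_length(system + ["Explain", "REST"], system + ["Explain", "GraphQL"]))  # 5

# Variable content first: the shared prefix ends almost immediately
print(shared_prefix_length(["Explain", "REST"] + system, ["Explain", "GraphQL"] + system))  # 1
```

The same reasoning applies to the Anthropic example above: keep the cached system prompt stable and put per-request content after it.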

Advanced Usage

Caching with Teams

Enable caching on team members and the team leader:

```python
from agno.agent import Agent
from agno.team import Team
from agno.models.openai import OpenAIChat

# Create agents with caching
researcher = Agent(
    name="Researcher",
    role="Research and gather information",
    model=OpenAIChat(id="gpt-4o", cache_response=True),
)

writer = Agent(
    name="Writer",
    role="Write clear and engaging content",
    model=OpenAIChat(id="gpt-4o", cache_response=True),
)

# Create team with caching
team = Team(
    members=[researcher, writer],
    model=OpenAIChat(id="gpt-4o", cache_response=True),
)

# First run - slow, second run - instant from cache
team.print_response("Research AI trends and write a summary")
```

Combining Both Caching Types

Maximize savings by using response and prompt caching together:

```python
from agno.agent import Agent
from agno.models.anthropic import Claude

agent = Agent(
    model=Claude(
        id="claude-sonnet-4-20250514",
        cache_response=True,  # Local response caching
        cache_system_prompt=True,  # Provider-side prompt caching
        cache_ttl=7200,  # Local response cache expires after 2 hours
    ),
    system_message="Your large system prompt...",
)
```

Monitoring

Track cache performance using metrics:

```python
response = agent.run("Your query")

# Response caching: a cache hit shows a much shorter duration than a miss
print(f"Duration: {response.metrics.duration:.3f}s")

# Prompt caching metrics (Anthropic)
print(f"Cache read tokens: {response.metrics.cache_read_tokens}")
print(f"Cache write tokens: {response.metrics.cache_write_tokens}")
```

Learn more about metrics in Agno.
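The token counts printed above can be condensed into a single hit ratio. This is a hypothetical sketch: `prompt_cache_hit_ratio` is an illustrative helper, and it assumes the input, cache-read, and cache-write counts are disjoint, as in Anthropic's usage fields, where `input_tokens` excludes cached tokens. Check how your provider and Agno version report these numbers before relying on the ratio.

```python
from typing import Optional


def prompt_cache_hit_ratio(
    input_tokens: int, cache_read_tokens: int, cache_write_tokens: int
) -> Optional[float]:
    """Fraction of prompt tokens served from the provider-side cache.

    Assumes the three counts are disjoint (uncached input, cache reads,
    cache writes). Returns None when there were no prompt tokens at all.
    """
    total = input_tokens + cache_read_tokens + cache_write_tokens
    if total == 0:
        return None
    return cache_read_tokens / total


# Hypothetical numbers: a first run that writes the cache, then a run that reads it
first = prompt_cache_hit_ratio(input_tokens=20, cache_read_tokens=0, cache_write_tokens=1500)
second = prompt_cache_hit_ratio(input_tokens=25, cache_read_tokens=1500, cache_write_tokens=0)
print(f"{first:.1%}")  # 0.0%
print(f"{second:.1%}")  # 98.4%
```

A ratio that stays near zero across repeated runs usually means the prompt is below the provider's minimum length or changes between requests.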

Configuration Reference

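Response Caching Parameters

| Parameter | Description | Default |
|---|---|---|
| `cache_response` | Enable or disable local response caching | `False` |
| `cache_ttl` | Cache expiration in seconds | Never expires |
| `cache_dir` | Custom cache location | `~/.agno/cache/model_responses` |

Prompt Caching Parameters (Anthropic)

| Parameter | Description |
|---|---|
| `cache_system_prompt` | Cache the system prompt on Anthropic's side |
| `extended_cache_time` | Extend the cache duration from 5 minutes to 1 hour (beta; used with the `extended-cache-ttl-2025-04-11` header) |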

Key Takeaways

- Response caching saves entire responses locally - use during development

- Prompt caching saves system prompts on provider side - use with large prompts (1024+ tokens)

- Combine both for maximum cost savings

- Never use response caching in production for user-facing dynamic content

- Monitor metrics to verify caching effectiveness
